December 17, 2017

OCI and AWS 10Gbit network test

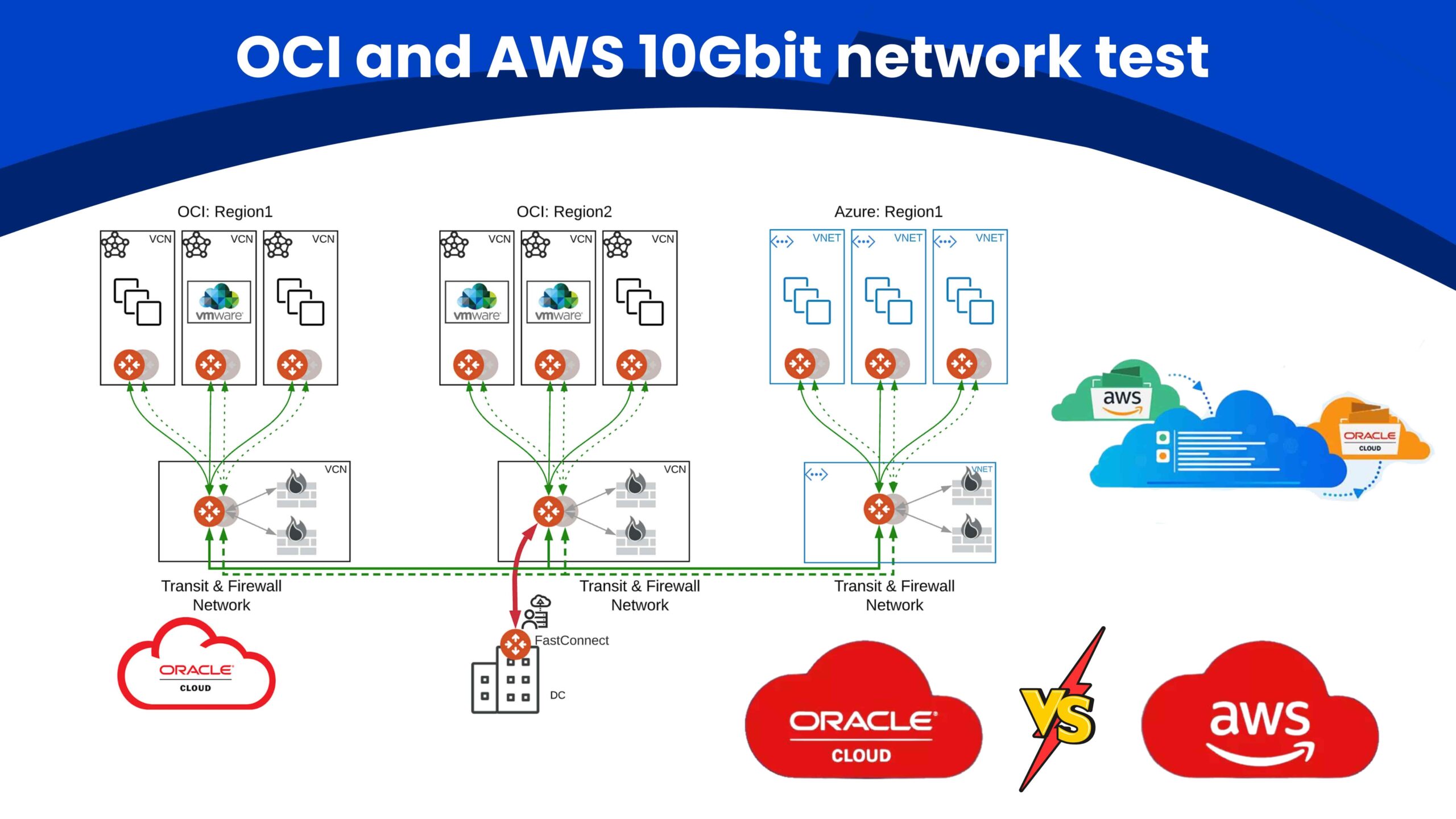

In this blog we compare the network performance of instances in OCI and AWS.

The network is a sensitive resource in multi-tenant environments, and it is usually one of the first places where performance degrades when the environment is oversubscribed.

AWS has the concept of placement groups: network performance is optimized between instances that belong to the same placement group. The downside of keeping instances in one placement group is that it limits the amount of resources available to them. If a big cluster has to be created, there is a good chance of hitting resource limits for specific instance types.

In Oracle Cloud Infrastructure this problem does not exist. The concept of placement groups does not really apply to OCI's flat network, because the whole Availability Domain (AD) is effectively one large placement group.

To cover as many cases as possible, the following tests were performed:

- OCI: network test between 2 instances, Same AD

- AWS: network test between 2 instances in same Placement group, same AZ

- AWS: network test between 2 instances in different Placement group, same AZ

- OCI: network test between 2 instances, different AD

- AWS: network test between 2 instances, different AZ

We measured network latency and throughput, with all tests running on 10Gbit instances.

Instances in the same AD/AZ belong to the same subnet.

We use uperf as the benchmarking tool. It is a commonly used tool for network performance testing.

I. Pick instance shapes

On AWS we used the cheapest 10Gbit instance, c4.8xlarge (60.0 GiB memory, 36 vCPUs):

24 * 30 * $1.59/hr = $1,144.80/month

On OCI we used the cheapest 10Gbit instance, BM.Standard1.36 (256 GB memory, 36 OCPUs):

24 * 30 * 36 * $0.0638/(OCPU*hour) = $1,653.70/month
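The two monthly figures can be reproduced with a short Python sketch. It assumes, as the formulas above do, a 30-day month and the hourly rates quoted in this post; the function and variable names are ours and purely illustrative.

```python
HOURS_PER_MONTH = 24 * 30  # the post assumes a 30-day month


def monthly_cost(hourly_rate, units=1):
    """Monthly cost in dollars for `units` billing units at `hourly_rate` each."""
    return HOURS_PER_MONTH * hourly_rate * units


# AWS bills per instance-hour, OCI bills per OCPU-hour (36 OCPUs in this shape).
aws_c4_8xlarge = monthly_cost(1.59)
oci_bm_standard1_36 = monthly_cost(0.0638, units=36)

print(f"AWS c4.8xlarge:      ${aws_c4_8xlarge:,.2f}/month")
print(f"OCI BM.Standard1.36: ${oci_bm_standard1_36:,.2f}/month")
```

The only difference between the two calculations is the billing unit, which is why the OCI formula carries the extra factor of 36.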

There is no exact match between the two vendors' shapes, but for network testing the chosen instances can be considered equivalent: memory does not make a difference, and both shapes have enough CPU power to load a 10Gbit link.

Bare metal vs VM does not make a big difference either, because the 10Gbit AWS shapes have SR-IOV support.

SR-IOV enables network traffic to bypass the software switch layer of the virtualization stack.

As a result, the I/O overhead of the software emulation layer is reduced, and network performance is nearly the same as in non-virtualized environments.

II. Install benchmarking tools

The OS is RHEL 7.4 on AWS and OEL 7.4 on OCI.

Install uperf. It has to be installed on both the source and the destination instance.

[ec2-user@ip-10-10-5-145 ~]$ sudo yum install git

[ec2-user@ip-10-10-5-145 ~]$ sudo yum install gcc

[ec2-user@ip-10-10-5-145 ~]$ sudo yum install autoconf

# Run the step below only if the rpm is missing. The rpm can be found in a public repo.

[ec2-user@ip-10-10-5-145 ~]$ sudo yum install /tmp/lksctp-tools-devel-1.0.17-2.el7.x86_64.rpm

[ec2-user@ip-10-10-5-145 ~]$ git clone https://github.com/uperf/uperf

[ec2-user@ip-10-10-5-145 ~]$ cd uperf

[ec2-user@ip-10-10-5-145 uperf]$ touch aclocal.m4 configure Makefile.am Makefile.in

[ec2-user@ip-10-10-5-145 uperf]$ mkdir /tmp/uperf1

[ec2-user@ip-10-10-5-145 uperf]$ export PATH=/tmp/uperf1:$PATH

[ec2-user@ip-10-10-5-145 uperf]$ ./configure --prefix=/tmp/uperf1

[ec2-user@ip-10-10-5-145 uperf]$ make

[ec2-user@ip-10-10-5-145 uperf]$ sudo make install

Repeat the same installation on the OCI instances.

III. Test

1. OCI: network test between 2 instances, Same AD

First, a ping test to get a feel for latency with a decent-size payload.

[opc@net-test-ad1-1 uperf]$ ping -c 50 -s 1400 -i 0.2 -q 10.0.0.14

PING 10.0.0.14 (10.0.0.14) 1400(1428) bytes of data.

--- 10.0.0.14 ping statistics ---

50 packets transmitted, 50 received, 0% packet loss, time 9799ms

rtt min/avg/max/mdev = 0.153/0.178/0.225/0.024 ms

The ping test may not be very accurate, because ICMP traffic can be de-prioritized in some networks. It gives rough numbers, but we will not use it for comparison.

uperf does not have this problem because it generates TCP traffic.
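For readers who want to collect the rough ping numbers from several runs, the rtt summary line can be pulled out with a small Python sketch. The function name and the conversion to microseconds are our own choices for illustration; the sample text is the summary printed by the ping run above.

```python
import re

# Matches the summary line printed by `ping -q` on Linux.
RTT_LINE = re.compile(
    r"rtt min/avg/max/mdev = ([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+) ms"
)


def parse_ping_rtt(output):
    """Return the rtt summary of `ping -q` output as a dict of microseconds."""
    match = RTT_LINE.search(output)
    if match is None:
        raise ValueError("no rtt summary line found")
    names = ("min", "avg", "max", "mdev")
    return {name: float(value) * 1000.0 for name, value in zip(names, match.groups())}


sample = """50 packets transmitted, 50 received, 0% packet loss, time 9799ms
rtt min/avg/max/mdev = 0.153/0.178/0.225/0.024 ms"""

rtt = parse_ping_rtt(sample)
print(f"avg rtt: {rtt['avg']:.0f}us, max rtt: {rtt['max']:.0f}us")
```

Converting to microseconds makes the ping figures easier to set side by side with the uperf latencies, which are reported in microseconds.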

Uperf test.

Run uperf in slave mode on the destination. For the duration of the test, stop the firewall if it blocks the required ports.

[opc@net-test-ad1-2 uperf]$ sudo systemctl stop firewalld.service

[opc@net-test-ad1-2 uperf]$ uperf -s
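Before starting a run, it can help to check from the source that the slave is reachable. The Python sketch below only tests whether a TCP connection can be opened. The port number 20000 in the commented usage line is an assumption about the control port that `uperf -s` listens on; verify it for your build. A successful check says nothing about the data ports, which is why the firewall is stopped entirely in this post.

```python
import socket


def tcp_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


# Hypothetical usage from the source instance. 10.0.0.14 is the destination used
# in this test; 20000 is an ASSUMED control port for `uperf -s`.
# print(tcp_port_open("10.0.0.14", 20000))
```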

Run the uperf latency test on the source, using all default settings in netperf.xml:

[opc@net-test-ad1-1 uperf]$ export h=10.0.0.14

[opc@net-test-ad1-1 uperf]$ export proto=TCP

[opc@net-test-ad1-1 uperf]$ cat workloads/netperf.xml

<?xml version="1.0"?>

<profile name="netperf">

<group nthreads="1">

<transaction iterations="1">

<flowop type="accept" options="remotehost=$h protocol=$proto

wndsz=50k tcp_nodelay"/>

</transaction>

<transaction duration="30s">

<flowop type="write" options="size=90"/>

<flowop type="read" options="size=90"/>

</transaction>

<transaction iterations="1">

<flowop type="disconnect" />

</transaction>

</group>

</profile>

[opc@net-test-ad1-1 uperf]$ uperf -m workloads/netperf.xml -a -e -p

Starting 1 threads running profile:netperf ... 0.00 seconds

Txn1 0 / 1.00(s) = 0 1op/s

Txn2 72.09MB / 30.23(s) = 20.00Mb/s 27781op/s

Txn3 0 / 0.00(s) = 0 0op/s

-------------------------------------------------------------------------------------------------

Total 72.09MB / 32.34(s) = 18.70Mb/s 25975op/s

Group Details

-------------------------------------------------------------------------------------------------

Group0 144.18M / 31.23(s) = 38.72Mb/s 53782op/s

Strand Details

-------------------------------------------------------------------------------------------------

Thr0 72.09MB / 32.24(s) = 18.76Mb/s 26055op/s

Txn Count avg cpu max min

-------------------------------------------------------------------------------------------------

Txn0 1 1.28ms 0.00ns 1.28ms 1.28ms

Txn1 419963 71.45us 0.00ns 2.81ms 61.32us

Txn2 1 36.66us 0.00ns 36.66us 36.66us

Flowop Count avg cpu max min

-------------------------------------------------------------------------------------------------

accept 1 1.28ms 0.00ns 1.28ms 1.28ms

write 419963 2.34us 0.00ns 92.21us 1.68us

read 419962 68.99us 0.00ns 2.81ms 6.51us

disconnect 1 31.75us 0.00ns 31.75us 31.75us

Netstat statistics for this run

-------------------------------------------------------------------------------------------------

Nic opkts/s ipkts/s obits/s ibits/s

ens2f0 12990 12990 16.24Mb/s 16.21Mb/s

lo 192 192 397.42Kb/s 397.42Kb/s

-------------------------------------------------------------------------------------------------

Run Statistics

Hostname Time Data Throughput Operations Errors

-------------------------------------------------------------------------------------------------

10.0.0.14 32.34s 72.09MB 18.70Mb/s 839928 0.00

master 32.34s 72.09MB 18.70Mb/s 839928 0.00

-------------------------------------------------------------------------------------------------

Difference(%) -0.00% 0.00% 0.00% 0.00% 0.00%

Run the uperf throughput test on the source:

For the throughput test we raised wndsz to 10 times the default in iperf.xml. This is needed because at higher latencies, and with some TCP sliding window configurations, RTT can become the bottleneck. With a high wndsz the true maximum throughput can be measured. These settings were used in all of our tests.
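The reason the window size matters can be sketched with the usual window/RTT bound: a sender can have at most one window of unacknowledged data in flight per round trip. The Python sketch below is illustrative only. It assumes that uperf reads "50k" as 50 * 1024 bytes, that the requested window is actually granted by the kernel, and it uses round RTT values of 70us and 700us rather than measured ones.

```python
def max_throughput_gbps(window_bytes, rtt_seconds, connections=1):
    """Upper bound on TCP throughput when each connection is limited by its window.

    At most one window of unacknowledged data can be in flight per round trip,
    so throughput <= window / RTT for every connection.
    """
    bits_per_second = connections * window_bytes * 8 / rtt_seconds
    return bits_per_second / 1e9


KIB = 1024  # assumption: uperf reads "50k" as 50 * 1024 bytes

for label, window in (("wndsz=50k", 50 * KIB), ("wndsz=500k", 500 * KIB)):
    for rtt_us in (70, 700):  # illustrative round-trip times, not measurements
        bound = max_throughput_gbps(window, rtt_us * 1e-6, connections=8)
        print(f"{label}, RTT {rtt_us}us, 8 connections: <= {bound:.2f} Gb/s")
```

Under these assumptions, eight connections with a 50k window and a 700us round trip are bounded at roughly 4.7 Gb/s, well below the 10Gbit line rate, while a 500k window lifts the bound far above it. That is the effect the larger wndsz is meant to rule out.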

[opc@net-test-ad1-1 uperf]$ export nthr=8

[opc@net-test-ad1-1 uperf]$ cat workloads/iperf.xml

<?xml version="1.0"?>

<profile name="iPERF">

<group nthreads="$nthr">

<transaction iterations="1">

<flowop type="connect" options="remotehost=$h protocol=$proto

wndsz=500k tcp_nodelay"/>

</transaction>

<transaction duration="30s">

<flowop type="write" options="count=10 size=8k"/>

</transaction>

<transaction iterations="1">

<flowop type="disconnect" />

</transaction>

</group>

</profile>

[opc@net-test-ad1-1 uperf]$ uperf -m workloads/iperf.xml -a -e -p

Starting 8 threads running profile:iperf ... 0.00 seconds

Txn1 0 / 1.00(s) = 0 8op/s

Txn2 34.47GB / 30.23(s) = 9.79Gb/s 149434op/s

Txn3 0 / 0.00(s) = 0 0op/s

--------------------------------------------------------------------------------------------------

Total 34.47GB / 32.33(s) = 9.16Gb/s 139718op/s

Group Details

--------------------------------------------------------------------------------------------------

Group0 3.45GB / 31.23(s) = 947.93Mb/s 144644op/s

Strand Details

--------------------------------------------------------------------------------------------------

Thr0 4.31GB / 32.23(s) = 1.15Gb/s 17539op/s

Thr1 4.30GB / 32.23(s) = 1.15Gb/s 17500op/s

Thr2 4.31GB / 32.23(s) = 1.15Gb/s 17519op/s

Thr3 4.32GB / 32.23(s) = 1.15Gb/s 17559op/s

Thr4 4.30GB / 32.23(s) = 1.15Gb/s 17504op/s

Thr5 4.31GB / 32.23(s) = 1.15Gb/s 17512op/s

Thr6 4.30GB / 32.23(s) = 1.15Gb/s 17491op/s

Thr7 4.31GB / 32.23(s) = 1.15Gb/s 17526op/s

Txn Count avg cpu max min

--------------------------------------------------------------------------------------------------

Txn0 8 446.91us 0.00ns 587.04us 384.51us

Txn1 451763 531.73us 0.00ns 2.61ms 8.74us

Txn2 8 8.30us 0.00ns 9.99us 6.09us

Flowop Count avg cpu max min

--------------------------------------------------------------------------------------------------

connect 8 446.44us 0.00ns 586.65us 384.02us

write 4517570 53.16us 0.00ns 2.61ms 8.69us

disconnect 8 8.00us 0.00ns 9.65us 5.73us

Netstat statistics for this run

--------------------------------------------------------------------------------------------------

Nic opkts/s ipkts/s obits/s ibits/s

ens2f0 134770 35059 9.23Gb/s 18.54Mb/s

lo 192 192 401.81Kb/s 401.81Kb/s

--------------------------------------------------------------------------------------------------

Run Statistics

Hostname Time Data Throughput Operations Errors

--------------------------------------------------------------------------------------------------

10.0.0.14 32.33s 34.46GB 9.16Gb/s 4517171 0.00

master 32.33s 34.47GB 9.16Gb/s 4517594 0.00

--------------------------------------------------------------------------------------------------

Difference(%) -0.00% 0.01% 0.01% 0.01% 0.00%

As we can see, network latency is 71.45us and throughput is 9.79Gb/s. The numbers are within SLA limits.
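The throughput output shows two rates, 9.79Gb/s on the Txn2 line and 9.16Gb/s on the Total line. A quick Python cross-check suggests they describe the same data divided by different durations. The sketch assumes that uperf's "GB" means 2**30 bytes and that "Gb/s" means 10**9 bits per second. That is our reading of the output, chosen because it reproduces the printed figures.

```python
GIB = 2**30  # assumption: uperf's "GB" is 2**30 bytes


def gbps(data_gib, seconds):
    """Throughput in Gb/s (10**9 bits per second) for `data_gib` GiB sent in `seconds`."""
    return data_gib * GIB * 8 / seconds / 1e9


txn2 = gbps(34.47, 30.23)   # write phase only
total = gbps(34.47, 32.33)  # whole run, as printed on the Total line

print(f"Txn2:  {txn2:.2f} Gb/s")
print(f"Total: {total:.2f} Gb/s")
```

The Total figure is lower only because the same 34.47GB is divided by the full 32.33s run rather than the 30.23s write phase, so the Txn2 rate is the better indicator of sustained throughput.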

2. AWS: Network test between 2 instances in one Placement group, same AZ

Ping test.

[ec2-user@ip-10-10-5-145 uperf]$ ping -c 50 -s 1400 -i 0.2 -q 10.10.6.238

PING 10.10.6.238 (10.10.6.238) 1400(1428) bytes of data.

--- 10.10.6.238 ping statistics ---

50 packets transmitted, 50 received, 0% packet loss, time 9799ms

rtt min/avg/max/mdev = 0.108/0.158/0.214/0.029 ms

Uperf test.

Only the essential part of the output is listed here, to keep the content short.

Run uperf latency test:

[ec2-user@ip-10-10-5-145 uperf]$ export h=10.10.6.238

[ec2-user@ip-10-10-5-145 uperf]$ export proto=TCP

[ec2-user@ip-10-10-5-145 uperf]$ uperf -m workloads/netperf.xml -a -e -p

…

Txn Count avg cpu max min

--------------------------------------------------------------------------------------------------

Txn0 1 5.48ms 0.00ns 5.48ms 5.48ms

Txn1 423172 70.79us 0.00ns 3.43ms 56.18us

Txn2 1 58.23us 0.00ns 58.23us 58.23us

Run uperf throughput test:

[ec2-user@ip-10-10-5-145 uperf]$ uperf -m workloads/iperf.xml -a -e -p

Starting 8 threads running profile:iperf ... 0.00 seconds

Txn1 0 / 1.00(s) = 0 8op/s

Txn2 34.44GB / 30.23(s) = 9.79Gb/s 149333op/s

Txn3 0 / 0.00(s) = 0 0op/s

--------------------------------------------------------------------------------------------------

Total 34.44GB / 32.33(s) = 9.15Gb/s 139622op/s

Network latency is 70.79us and throughput is 9.79Gb/s. Numbers are within SLA limits.

This is not bad at all.

Confirm that SR-IOV is enabled:

[ec2-user@ip-10-10-5-145 ~]$ ethtool -i eth0

driver: ixgbevf ---<<-------- ixgbevf used

version: 3.2.2-k-rh7.4

firmware-version:

expansion-rom-version:

bus-info: 0000:00:03.0

supports-statistics: yes

supports-test: yes

supports-eeprom-access: no

supports-register-dump: yes

supports-priv-flags: no
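The same check can be scripted when many instances have to be verified. The Python sketch below parses `ethtool -i` output into a dictionary and looks at the driver field. The set of drivers treated as SR-IOV virtual function drivers contains only ixgbevf, the one seen in this post. Extending it to other drivers is left as an assumption for the reader to verify.

```python
def parse_ethtool_info(output):
    """Parse `ethtool -i` output into a dict of field name -> value."""
    info = {}
    for line in output.splitlines():
        # Split on the first colon only, so values such as PCI addresses stay intact.
        key, sep, value = line.partition(":")
        if sep:
            info[key.strip()] = value.strip()
    return info


# Assumption: only the driver observed in this post is listed here.
VF_DRIVERS = {"ixgbevf"}

sample = """driver: ixgbevf
version: 3.2.2-k-rh7.4
firmware-version:
bus-info: 0000:00:03.0"""

info = parse_ethtool_info(sample)
print("SR-IOV VF driver in use:", info["driver"] in VF_DRIVERS)
```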

These test results appear to confirm the following theory: when an AWS placement group is used, the performance of the 10Gbit OCI bare metal shape is basically identical to that of the 10Gbit AWS VM shape.

3. AWS: network test between 2 instances in different Placement group, same AZ

Ping test.

[ec2-user@ip-10-10-5-145 uperf]$ ping -c 50 -s 1400 -i 0.2 -q 10.10.3.181

PING 10.10.3.181 (10.10.3.181) 1400(1428) bytes of data.

--- 10.10.3.181 ping statistics ---

50 packets transmitted, 50 received, 0% packet loss, time 9802ms

rtt min/avg/max/mdev = 0.191/0.336/2.551/0.456 ms

Uperf test.

Run uperf latency test:

[ec2-user@ip-10-10-5-145 uperf]$ export h=10.10.3.181

[ec2-user@ip-10-10-5-145 uperf]$ export proto=TCP

[ec2-user@ip-10-10-5-145 uperf]$ uperf -m workloads/netperf.xml -a -e -p

…

Txn Count avg cpu max min

--------------------------------------------------------------------------------------------------

Txn0 1 605.02us 0.00ns 605.02us 605.02us

Txn1 152148 197.06us 0.00ns 10.92ms 146.15us

Txn2 1 40.05us 0.00ns 40.05us 40.05us

Run uperf throughput test:

[ec2-user@ip-10-10-5-145 uperf]$ uperf -m workloads/iperf.xml -a -e -p

Starting 8 threads running profile:iperf ... 0.00 seconds

Txn1 0 / 1.00(s) = 0 8op/s

Txn2 29.10GB / 30.23(s) = 8.27Gb/s 126164op/s

Txn3 0 / 0.00(s) = 0 0op/s

--------------------------------------------------------------------------------------------------

Total 29.10GB / 32.33(s) = 7.73Gb/s 117959op/s

The key numbers are the average Txn1 latency from the latency test and the Txn2 throughput from the throughput test. All numbers are summarized in the comparison table at the end of this blog.

4. OCI: network test between 2 instances, different AD

Ping test.

[opc@net-test-ad1-1 uperf]$ ping -c 50 -s 1400 -i 0.2 -q 10.0.1.5

PING 10.0.1.5 (10.0.1.5) 1400(1428) bytes of data.

--- 10.0.1.5 ping statistics ---

50 packets transmitted, 50 received, 0% packet loss, time 9800ms

rtt min/avg/max/mdev = 0.706/0.724/0.786/0.018 ms

Uperf test.

Run uperf latency test:

[opc@net-test-ad1-1 uperf]$ export h=10.0.1.5

[opc@net-test-ad1-1 uperf]$ export proto=TCP

[opc@net-test-ad1-1 uperf]$ uperf -m workloads/netperf.xml -a -e -p

…

Txn Count avg cpu max min

--------------------------------------------------------------------------------------------------

Txn0 1 1.89ms 0.00ns 1.89ms 1.89ms

Txn1 43090 696.85us 0.00ns 1.42ms 679.91us

Txn2 1 16.21us 0.00ns 16.21us 16.21us

Run uperf throughput test:

[opc@net-test-ad1-1 uperf]$ uperf -m workloads/iperf.xml -a -e -p

Starting 8 threads running profile:iperf ... 0.00 seconds

Txn1 0 / 1.00(s) = 0 8op/s

Txn2 34.46GB / 30.23(s) = 9.79Gb/s 149424op/s

Txn3 0 / 0.00(s) = 0 0op/s

--------------------------------------------------------------------------------------------------

Total 34.46GB / 32.33(s) = 9.16Gb/s 139708op/s

5. AWS: network test between 2 instances, different AZ

Ping test.

[ec2-user@ip-10-10-5-145 uperf]$ ping -c 50 -s 1400 -i 0.2 -q 10.10.2.207

PING 10.10.2.207 (10.10.2.207) 1400(1428) bytes of data.

--- 10.10.2.207 ping statistics ---

50 packets transmitted, 50 received, 0% packet loss, time 9801ms

rtt min/avg/max/mdev = 0.638/0.678/0.741/0.038 ms

Uperf test.

Run uperf latency test:

[ec2-user@ip-10-10-5-145 uperf]$ export h=10.10.2.207

[ec2-user@ip-10-10-5-145 uperf]$ export proto=TCP

[ec2-user@ip-10-10-5-145 uperf]$ uperf -m workloads/netperf.xml -a -e -p

…

Txn Count avg cpu max min

--------------------------------------------------------------------------------------------------

Txn0 1 2.67ms 0.00ns 2.67ms 2.67ms

Txn1 46138 650.44us 0.00ns 201.36ms 542.42us

Txn2 1 22.06us 0.00ns 22.06us 22.06us

Run uperf throughput test:

[ec2-user@ip-10-10-5-145 uperf]$ uperf -m workloads/iperf.xml -a -e -p

Starting 8 threads running profile:iperf ... 0.00 seconds

Txn1 0 / 1.00(s) = 0 8op/s

Txn2 17.57GB / 30.23(s) = 4.99Gb/s 76208op/s

Txn3 0 / 0.00(s) = 0 0op/s

--------------------------------------------------------------------------------------------------

Total 17.57GB / 32.33(s) = 4.67Gb/s 71252op/s

IV. Conclusion

Below is a summary of the results:

| Env | Test description | Latency | Throughput |
|---|---|---|---|
| OCI | Test between two instances, same AD | 71.45us | 9.79Gb/s |
| AWS | Test between two instances, in same Placement group, same AZ | 70.79us | 9.79Gb/s |
| AWS | Test between two instances, in different Placement group, same AZ | 197.06us | 8.27Gb/s |
| OCI | Test between two instances, different AD | 696.85us | 9.79Gb/s |
| AWS | Test between two instances, different AZ | 650.44us | 4.99Gb/s |

The AWS configuration with a placement group performed very similarly to OCI with two instances in the same AD.

Placing the AWS instances in different placement groups nearly tripled latency (70.79us to 197.06us) and cut throughput by about 15% (9.79Gb/s to 8.27Gb/s).

Cross AZ/AD traffic added more latency, which was expected, but cross AZ traffic also cut throughput in AWS roughly in half (to 4.99Gb/s), which was rather unexpected. Potential causes for this could be examined in a future blog.
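The relative differences between the scenarios can be recomputed from the measured values with a short Python sketch. The numbers are copied from the uperf runs in this post; the dictionary keys and helper names are ours.

```python
# (latency in microseconds, throughput in Gb/s), copied from the uperf runs above
results = {
    "OCI same AD": (71.45, 9.79),
    "AWS same placement group": (70.79, 9.79),
    "AWS different placement group": (197.06, 8.27),
    "OCI different AD": (696.85, 9.79),
    "AWS different AZ": (650.44, 4.99),
}


def relative_change(baseline, value):
    """Percentage change of `value` relative to `baseline`."""
    return (value - baseline) / baseline * 100.0


def compare(baseline_name, other_name):
    """Return (latency ratio, throughput change in percent) of other vs baseline."""
    base_latency, base_throughput = results[baseline_name]
    latency, throughput = results[other_name]
    return latency / base_latency, relative_change(base_throughput, throughput)


pairs = (
    ("AWS same placement group", "AWS different placement group"),
    ("AWS same placement group", "AWS different AZ"),
    ("OCI same AD", "OCI different AD"),
)
for base, other in pairs:
    ratio, change = compare(base, other)
    print(f"{other} vs {base}: latency x{ratio:.1f}, throughput {change:+.1f}%")
```

Each scenario is compared against the best case for the same vendor, so the ratios show what leaving the placement group, the AZ, or the AD costs on that platform.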
